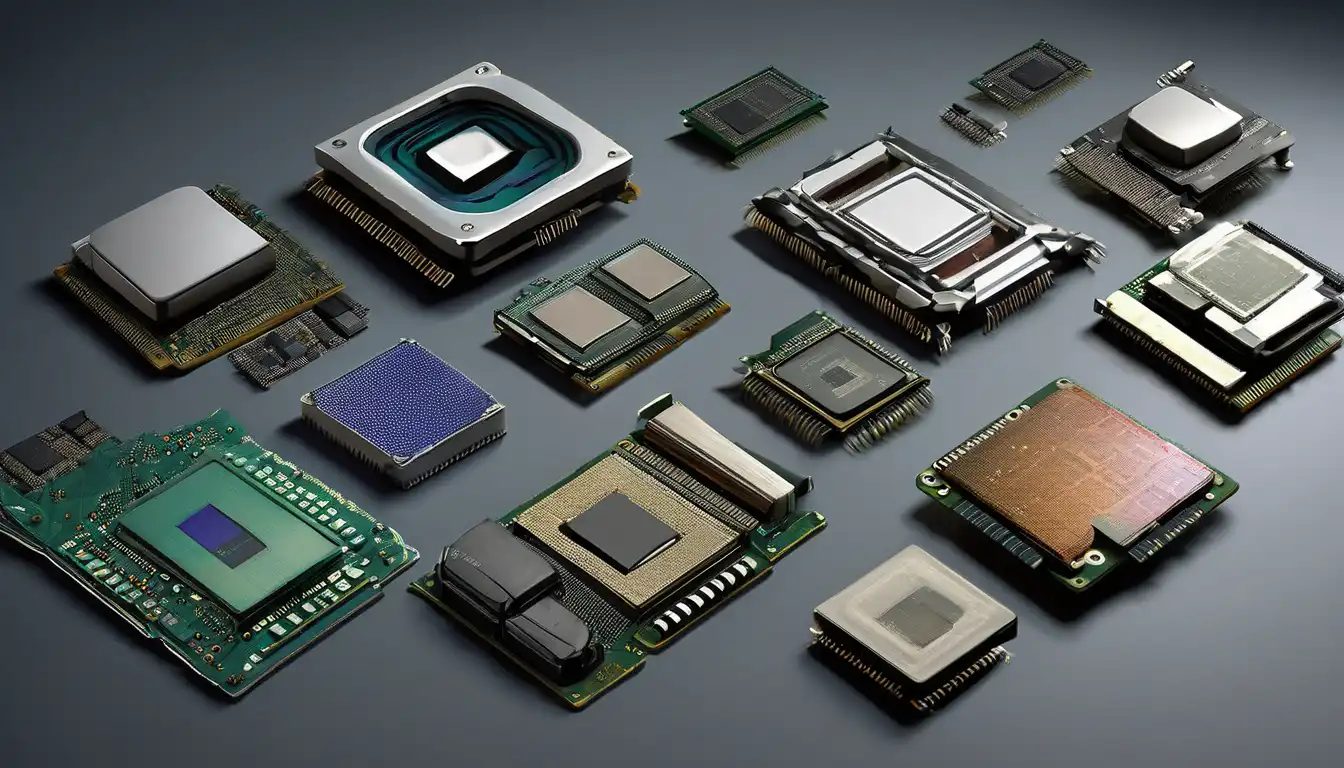

The Dawn of Computing: Early Processor Beginnings

The evolution of computer processors represents one of the most remarkable technological journeys in human history. Processors began as vacuum tube systems that filled entire rooms and have become fingernail-sized chips, built from microscopic transistors, that perform billions of operations per second. This transformation did not happen overnight; it came through decades of innovation, competition, and a relentless pursuit of efficiency.

In the 1940s and 1950s, early computers like ENIAC used vacuum tubes as their primary processing components. These systems were enormous, consuming vast amounts of electricity and generating significant heat. A single processor of this era might contain thousands of vacuum tubes, each representing a basic switching element. The limitations were obvious: reliability issues, massive power requirements, and physical size constraints made these early processors impractical for widespread use.

The Transistor Revolution

The invention of the transistor in 1947 marked the first major turning point in processor evolution. Bell Labs researchers John Bardeen, Walter Brattain, and William Shockley developed this semiconductor device that could amplify or switch electronic signals. Transistors were smaller, more reliable, and consumed significantly less power than vacuum tubes. This breakthrough paved the way for the second generation of computers in the late 1950s and early 1960s.

Transistor-based processors enabled computers to become more compact and affordable. IBM's 7000 series and DEC's PDP computers demonstrated the practical advantages of transistor technology. However, each transistor was still a discrete component that had to be manufactured and wired in individually, which limited the complexity and speed of these transistorized machines.

The Integrated Circuit Era

The integrated circuit (IC), demonstrated in 1958 by Jack Kilby at Texas Instruments and developed independently in 1959 by Robert Noyce at Fairchild Semiconductor, revolutionized processor design. ICs allowed multiple transistors to be fabricated on a single silicon chip, dramatically reducing size and cost while improving reliability. This innovation led to the third generation of computers in the 1960s.

Early integrated circuits contained only a few transistors, but the technology advanced rapidly. By the mid-1960s, manufacturers could produce chips with dozens of components. The real breakthrough came with the development of MOS (Metal-Oxide-Semiconductor) technology, which enabled higher density and lower power consumption. These advances set the stage for the microprocessor revolution that would follow.

The Birth of the Microprocessor

1971 marked a pivotal moment in processor history with Intel's introduction of the 4004, the world's first commercially available microprocessor. Designed by Ted Hoff, Federico Faggin, and Stanley Mazor, the 4004 contained 2,300 transistors and operated at 740 kHz. Though primitive by today's standards, it demonstrated that an entire central processing unit could fit on a single chip.

The success of the 4004 led to more powerful processors like the Intel 8008 and 8080, which formed the foundation of early personal computers; the 8080 powered the Altair 8800, one of the first personal computers. Competitors quickly entered the market, with companies like Motorola and Zilog developing their own microprocessors. Zilog's Z80, for example, became extremely popular in early home computers and embedded systems.

The x86 Architecture Dominance

Intel's 8086 processor, introduced in 1978, established the x86 architecture that would dominate personal computing for decades. The 8086's 16-bit design offered significant performance improvements over earlier 8-bit processors. When IBM selected the Intel 8088 (a variant of the 8086) for its first personal computer in 1981, it cemented x86 as the industry standard.

The 1980s saw rapid advancement in x86 processors with the 80286, 80386, and 80486. Each generation brought higher clock speeds, more transistors, and new features such as protected mode operation (introduced with the 80286) and an on-chip math coprocessor (integrated in the 80486). AMD emerged as a significant competitor, producing x86-compatible processors that challenged Intel's dominance and drove innovation through competition.

The Pentium Era and Beyond

Intel's introduction of the Pentium processor in 1993 marked another milestone. The Pentium featured a superscalar architecture with two execution pipelines, allowing it to execute up to two instructions per clock cycle. Clock speeds jumped from 60 MHz to over 200 MHz within a few years, and the original Pentium already contained about 3.1 million transistors.

The late 1990s and early 2000s brought further innovations, including MMX technology for multimedia applications, larger caches, and the transition to smaller manufacturing processes. AMD's Athlon processors challenged Intel's performance leadership, and both companies pushed clock speeds beyond 1 GHz. This race delivered rapid performance gains but also drove up heat output and power consumption.

The Multi-Core Revolution

By the mid-2000s, rising power consumption and heat dissipation had made further increases in clock speed impractical. The solution emerged in the form of multi-core processors, which placed multiple processing cores on a single chip. AMD's Athlon 64 X2 and Intel's Core 2 Duo processors demonstrated that parallel processing could deliver performance gains without higher clock speeds, provided software was written to spread its work across cores.

Multi-core technology has continued to evolve. Server processors now contain dozens of cores, and many consumer chips combine high-performance and power-efficient cores to suit different types of workloads. Multi-core processors now power everything from smartphones to supercomputers, and this approach has proven essential for highly parallel tasks like artificial intelligence, video processing, and scientific simulations.

Specialized Processing and AI Acceleration

Recent years have seen a shift toward specialized processing units optimized for specific tasks. Graphics Processing Units (GPUs), originally designed for rendering graphics, have become essential for general parallel computing. Companies like NVIDIA and AMD have developed GPUs that apply the same arithmetic operation across thousands of data elements at once, handling such data-parallel workloads far more efficiently than traditional CPUs.

The rise of artificial intelligence has driven development of dedicated AI accelerators like Google's Tensor Processing Units (TPUs) and various neural processing units (NPUs). These specialized processors are optimized for the matrix operations fundamental to machine learning algorithms. The trend toward heterogeneous computing, combining different types of processors optimized for specific workloads, represents the current frontier of processor evolution.

Future Directions: Quantum and Neuromorphic Computing

Looking ahead, processor technology continues to evolve in new directions. Quantum computing represents a fundamentally different approach to processing information, using quantum bits (qubits) that can exist in a superposition of states. While still at an early stage, quantum processors show promise for certain classes of problems believed to be intractable for classical computers.

Neuromorphic computing, inspired by the human brain, represents another promising direction. These processors mimic the brain's neural structure, potentially offering massive parallelism and energy efficiency for cognitive computing tasks. Companies like Intel and IBM are actively developing neuromorphic chips that could revolutionize artificial intelligence applications.

The evolution of computer processors demonstrates an incredible trajectory of innovation, from room-sized vacuum tube systems to microscopic chips containing billions of transistors. Each generation has built upon the previous, driven by Moore's Law and relentless engineering innovation. As we look toward quantum and neuromorphic computing, it's clear that the processor evolution story is far from over.
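Moore's Law is commonly summarized as transistor counts doubling roughly every two years. As a rough illustration, the sketch below turns that rule of thumb into arithmetic, N(t) = 2300 × 2^((t − 1971) / 2), starting from the 4004's 2,300 transistors in 1971. The fixed two-year doubling period and the helper name `projected_transistors` are illustrative assumptions, not a precise model of any real product line.

```python
def projected_transistors(year: int, base_year: int = 1971,
                          base_count: int = 2300,
                          doubling_years: float = 2.0) -> float:
    """Project a transistor count under a simplified Moore's Law.

    Assumes (hypothetically) a constant doubling period starting from
    the Intel 4004's 2,300 transistors in 1971.
    """
    doublings = (year - base_year) / doubling_years
    return base_count * 2 ** doublings


# Print the projection at ten-year intervals.
for year in (1971, 1981, 1991, 2001, 2011, 2021):
    print(year, f"{projected_transistors(year):,.0f}")
```

Under these assumptions, each decade adds five doublings (a factor of 32), so the projection grows from 2,300 in 1971 to roughly 77 billion by 2021. Real chips deviate from this idealized curve, but the exercise shows how steady doubling compounds into the leap from thousands to billions of transistors.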

Understanding this history helps us appreciate the technology we use daily and provides context for future developments. The journey from simple switching elements to complex parallel processing systems represents one of humanity's greatest technological achievements, with implications that continue to reshape our world.